Computer Vision

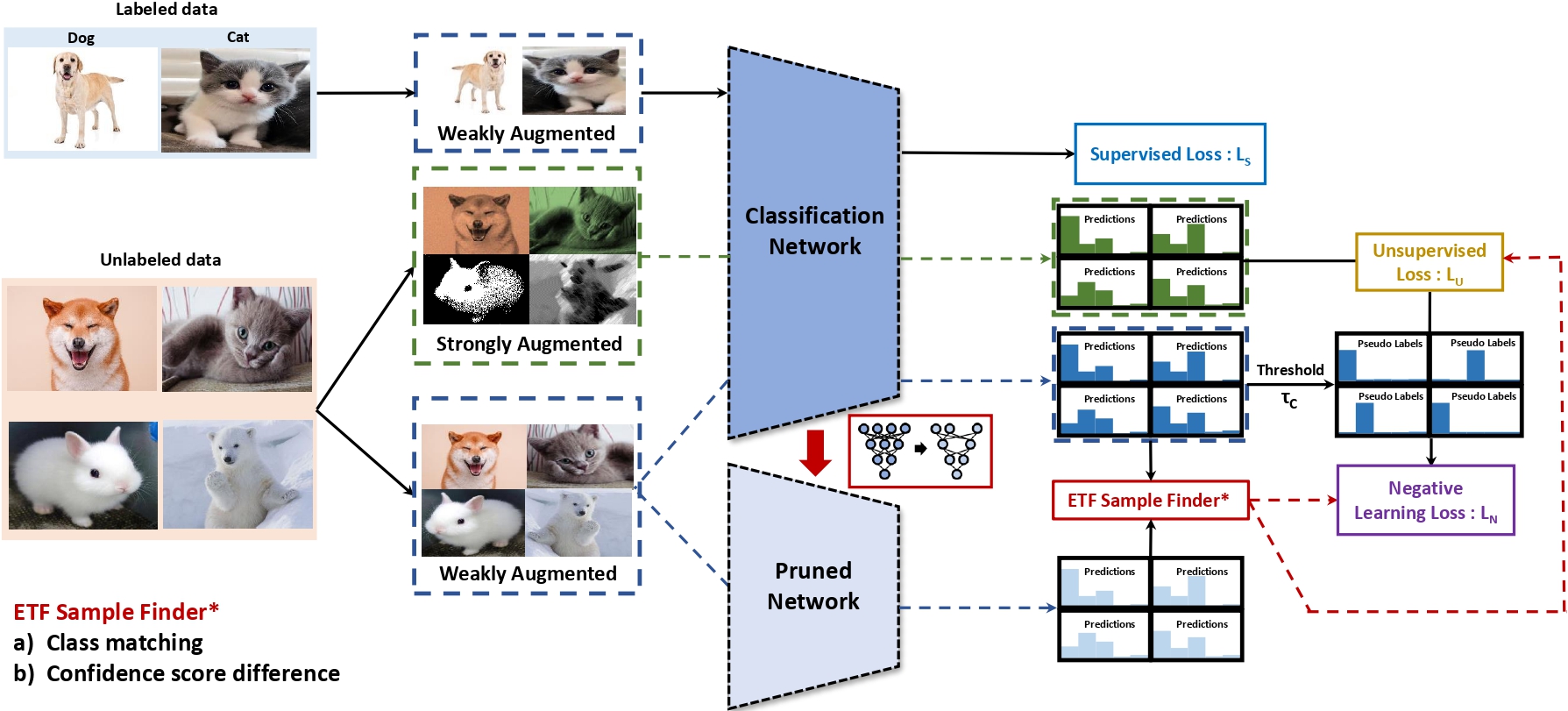

In computer vision, we aim to extract meaningful, discriminative features from diverse images and incorporate them into model training. We apply these approaches in areas such as knowledge distillation and semi-supervised learning, and they have proven effective across a range of vision tasks, including classification and object detection.

Multimodal Model

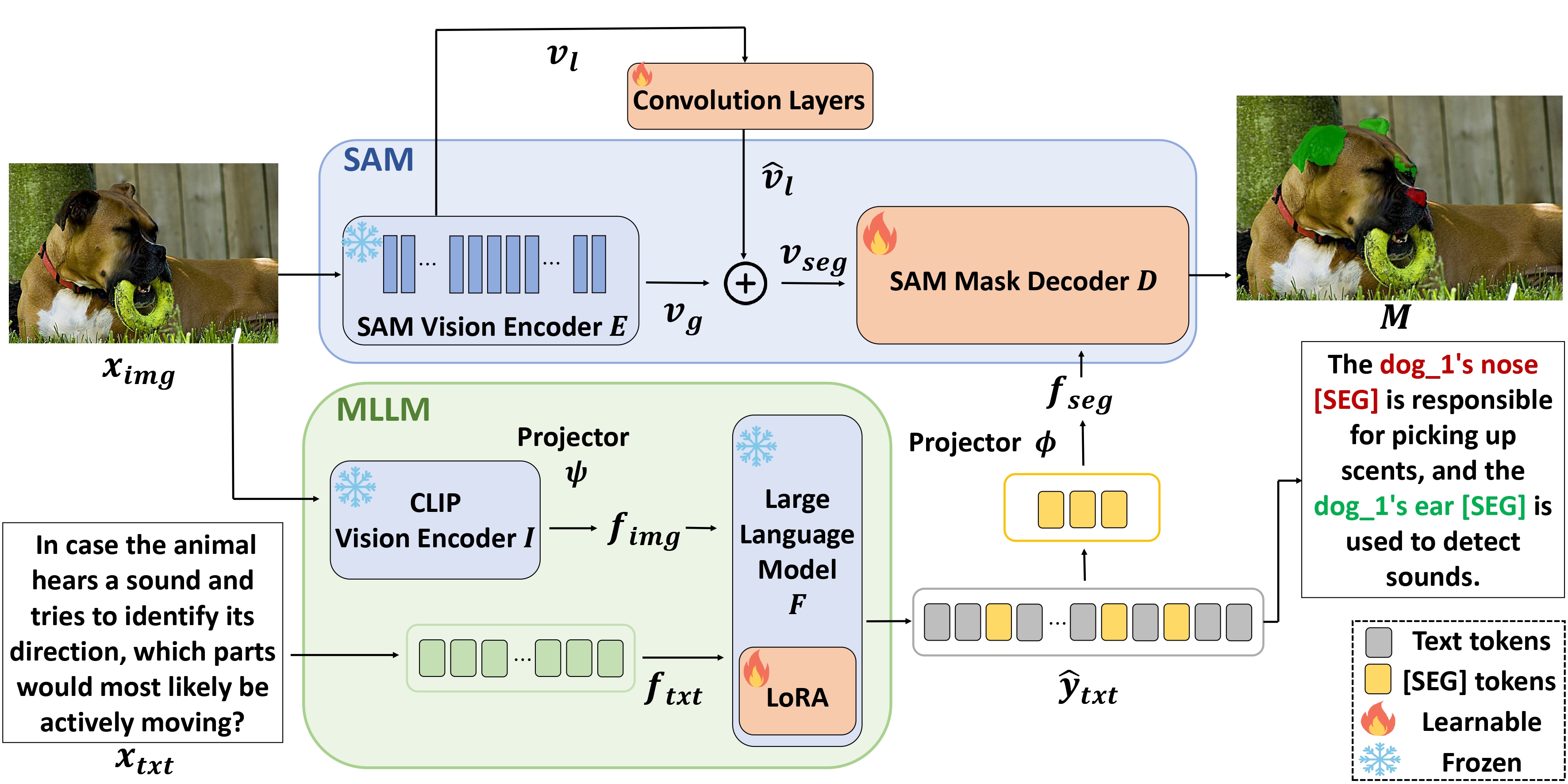

Advances in multimodal research, particularly in vision-language models, have driven much of the recent progress in human–AI interaction. Our work focuses on building AI systems that can better understand and reason over the diverse forms of text that humans provide.

Time Series Analysis

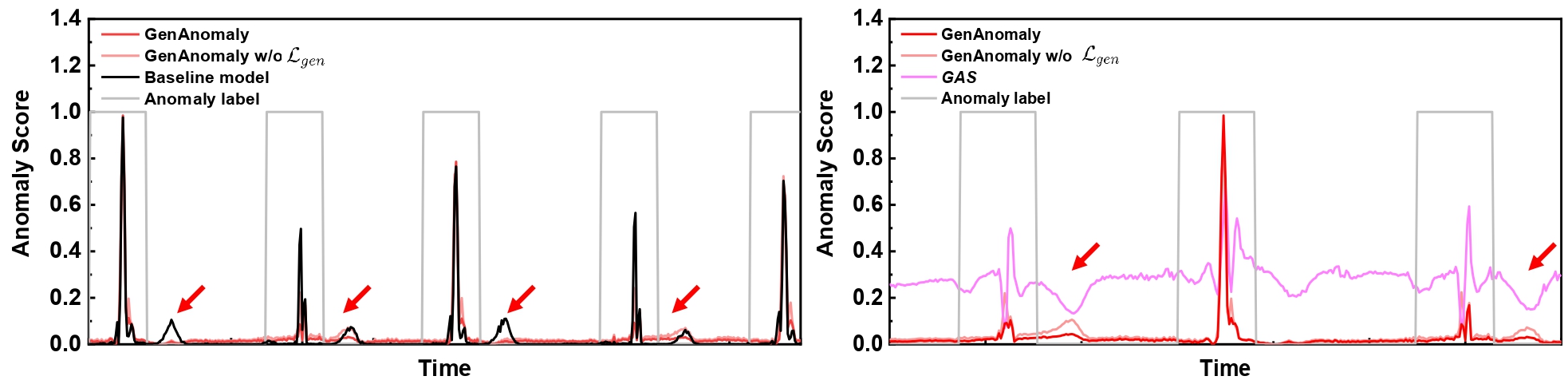

We investigate time series analysis methods that integrate sensor data from robots and other sources, with applications in forecasting, anomaly detection, and beyond. Our research examines how multiple time series interact and what effects they produce in combination, enabling applications in domains such as smart factories and finance.

AI for Physics

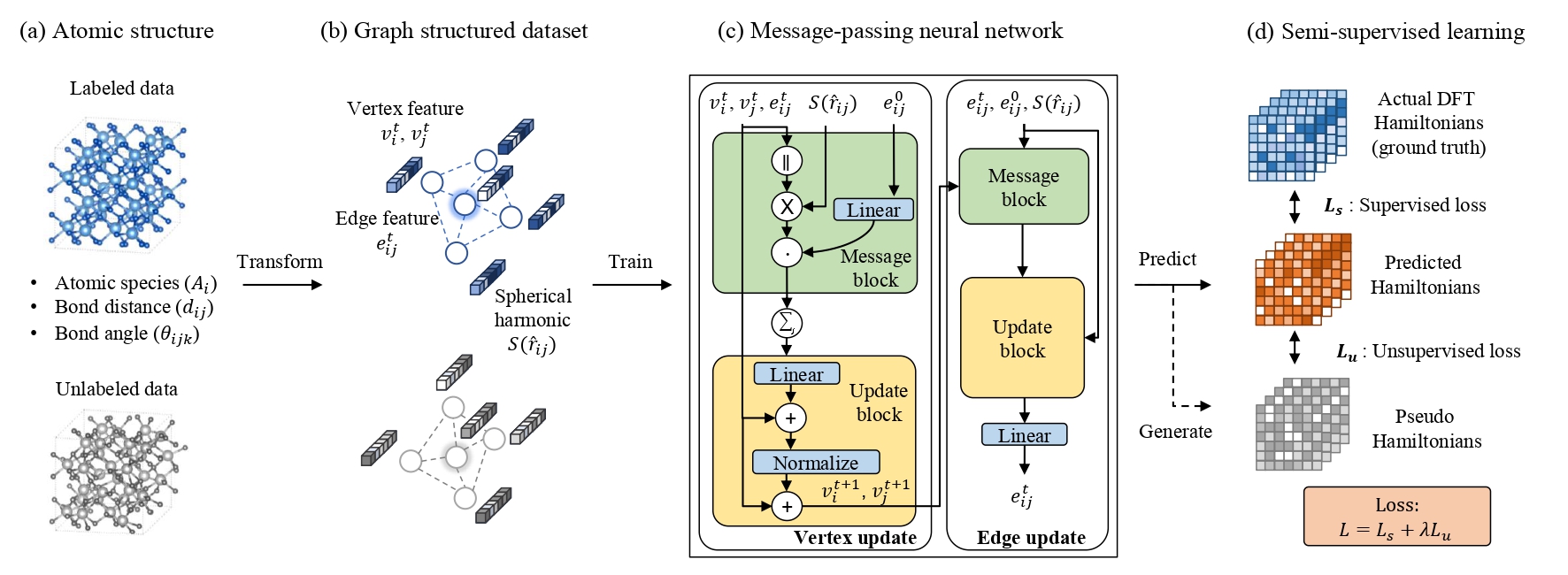

First-principles quantum simulations play a critical role in accurately predicting the physical properties of semiconductors, especially as devices continue to scale down. We develop deep learning frameworks that complement and accelerate these simulations, enabling more precise predictions of semiconductor properties.